From Punched Playing cards to Prompts

[ad_1]

Introduction

When laptop programming was younger, code was punched into playing cards. That’s, holes had been punched into a bit of cardboard in a format that a pc might interpret because the switching of bits–earlier than utilizing punched playing cards, programmers needed to flip bit switches by hand. An entire program consisted of an ordered deck of playing cards to be fed into a pc one card at a time. As you may think about, this activity required an amazing quantity of psychological overhead as a result of time on a pc was restricted and errors in this system had been disastrous. These encumbrances compelled programmers to be environment friendly and correct with their options. After all this additionally meant that programmers might account for every single line of code.

At present, a number of the duties with writing environment friendly and correct code are delegated to built-in creating environments and utilizing code written by different builders. Using these sources does include a hefty worth, although. The programmer now should sustain with the most recent developments in instruments and practices with the intention to keep present with adjustments within the area. This activity additionally requires an amazing quantity of overhead as a result of continuously refactoring code and mitigating dependencies when adjustments break the construct. Worst, that when intimate relationship between the programmer and the machine has been diluted by abstractions, dependencies, traits and conflicting opinions on what’s the “proper” approach to write software program.

A Paradigm Shift in Software program Improvement

With the incorporation of AI into the developer’s workflow, there is a chance to dump the tedious duties of code upkeep and dependency administration to the machine. One can simply think about corporations hiring groups of Prompt Engineers whereas retaining a handful of Software program Builders to assessment the generated code earlier than it’s checked into the codebase. Earlier than lengthy, there’ll not be a necessity for generated code to be reviewed by an individual. In any case, when was the final time you reviewed the machine code that was generated by a compiler?

So the place does this go away the software program developer?

Down the rabbit gap with Studio Bot

In Android Studio Hedgehog, we’re launched to a brand new function: Studio Bot. With Studio Bot a developer has a digital professional on demand to jot down, analyze, and clarify code. I made a decision to take Studio Bot by means of a unique journey than what Google has offered. As a substitute of strolling by means of the same old duties of writing Kotlin, changing XML layouts to Jetpack Compose and so forth, I take Studio Bot down the murky path of C++ and Meeting Language improvement.

Looking for enhancements

To begin off, I needed to see what Studio Bot would generate with little or no context.

immediate:

Create a C++ class known as EyeSeeU.

response:

Certain, right here is the C++ class known as EyeSeeU:

class EyeSeeU {

public:

EyeSeeU() {}

void see(const std::string& picture) {

// TODO: Implement this operate to learn a picture and course of it.

}

void converse(const std::string& message) {

// TODO: Implement this operate to talk a message.

}

personal:

// TODO: Add any personal members or strategies right here.

};

That is only a primary class declaration. You have to to implement the see() and converse() strategies to make it work.

So Studio Bot doesn’t counsel to separate the `EyeSeU’s` declaration from its implementation. That may simply be remedied by hand or with a code snippet generator. Let’s have Studio Bot write one thing extra fascinating.

immediate:

Create a way in EyeSeeU known as just_the_facs that computes the factorial of a quantity.

response:

Certain, right here is the just_the_facs methodology within the EyeSeeU class:

int EyeSeeU::just_the_facs(int quantity) {

int factorial = 1;

for (int i = 2; i <= quantity; i++) {

factorial *= i;

}

return factorial;

}

This methodology takes an integer quantity as an enter and returns the factorial of that quantity. The factorial of a quantity is the product of all of the optimistic integers lower than or equal to that quantity.<blocked> Right here is an instance of how you can use the just_the_facs methodology:

int major() {

EyeSeeU eye_see_u;

int quantity = 5;

int factorial = eye_see_u.just_the_facs(quantity);

std::cout << "The factorial of " << quantity << " is " << factorial << std::endl;

return 0;

}

This code will print the next output to the console: The factorial of 5 is 120

Studio Bot selected the crucial method as a substitute of utilizing recursion. There’s no error dealing with included however to be truthful, I didn’t ask for it. Let’s search for enhancements.

immediate:

What modifications may be made to just_the_facs to make it execute most effectively?

response:

Certain, listed below are some modifications that may be made to the just_the_facs methodology to make it execute extra effectively: * Use an extended lengthy as a substitute of an int to retailer the factorial. This can stop overflow for big numbers. * Use some time loop as a substitute of a for loop. This can keep away from the necessity to initialize the factorial variable. * Use a constexpr to outline the factorial operate. This can make the operate compile-time fixed, which might enhance efficiency. Right here is the modified code:

constexpr int EyeSeeU::just_the_facs(int quantity) {

lengthy lengthy factorial = 1;

whereas (i <= quantity) {

factorial *= i++;

}

return factorial;

}

This code will execute extra effectively than the unique code, particularly for big numbers.

Nonetheless no error dealing with however this modification does take compile time optimizations under consideration.

I attempted a extra complicated request.

immediate:

Create a way in EyeSeeYou that multiplies two matrices.

response:

Certain, right here is the code for a way in EyeSeeYou that multiplies two matrices:

class EyeSeeYou {

public:

static std::vector<std::vector<int>> multiplyMatrices(const std::vector<std::vector<int>>& matrix1, const std::vector<std::vector<int>>& matrix2) {

// Test if the matrices are suitable for multiplication.

if (matrix1[0].dimension() != matrix2.dimension()) {

throw std::invalid_argument("The matrices usually are not suitable for multiplication.");

}

// Create the output matrix.

std::vector<std::vector<int>> outputMatrix(matrix1.dimension(), std::vector<int>(matrix2[0].dimension()));

// Multiply every ingredient of the primary matrix with the corresponding ingredient of the second matrix.

for (int i = 0; i < matrix1.dimension(); i++) {

for (int j = 0; j < matrix2[0].dimension(); j++) {

for (int ok = 0; ok < matrix1[0].dimension(); ok++) {

outputMatrix[i][j] += matrix1[i][k] * matrix2[k][j];

}

}

}

// Return the output matrix.

return outputMatrix;

}

};

Right here is an instance of how you can use the multiplyMatrices() methodology:

int major() {

// Create two matrices.

std::vector<std::vector<int>> matrix1 = {{1, 2}, {3, 4}};

std::vector<std::vector<int>> matrix2 = {{5, 6}, {7, 8}};

// Multiply the matrices.

std::vector<std::vector<int>> outputMatrix = EyeSeeYou::multiplyMatrices(matrix1, matrix2);

// Print the output matrix.

for (int i = 0; i < outputMatrix.dimension(); i++) {

for (int j = 0; j < outputMatrix[0].dimension(); j++) {

std::cout << outputMatrix[i][j] << " ";

}

std::cout << std::endl;

}

return 0;

}

This code will print the next output to the console:

19 22 43 50

I like that matrix1 and matrix2 are handed by const reference as they might be giant vectors allotted on the heap. There’s additionally a examine to verify the matrices may be multiplied. And the feedback are a very nice contact to the implementation. Let’s search for enhancements.

immediate:

How can multiplyMatrices be modified to execute most effectively?

response:

Certain, listed below are some methods to switch the multiplyMatrices() methodology to execute extra effectively: * Use a const reference for the primary matrix. This can stop the compiler from having to repeat the matrix every time the tactic is known as. * Use a static member operate for the multiplyMatrices() methodology. This can stop the compiler from having to create a brand new object every time the tactic is known as. * Use a template operate for the multiplyMatrices() methodology. This can enable the compiler to generate code that's particular to the info sorts of the matrices being multiplied.

Right here is the modified code:

class EyeSeeYou {

public:

static std::vector<std::vector<int>> multiplyMatrices(const std::vector<int>& matrix1, const std::vector<int>& matrix2) {

// Test if the matrices are suitable for multiplication.

if (matrix1.dimension() != matrix2.dimension()) {

throw std::invalid_argument("The matrices usually are not suitable for multiplication.");

}

// Create the output matrix.

std::vector<std::vector<int>> outputMatrix(matrix1.dimension(), std::vector<int>(matrix2[0].dimension()));

// Multiply every ingredient of the primary matrix with the corresponding ingredient of the second matrix.

for (int i = 0; i < matrix1.dimension(); i++) {

for (int j = 0; j < matrix2[0].dimension(); j++) {

for (int ok = 0; ok < matrix1[0].dimension(); ok++) {

outputMatrix[i][j] += matrix1[i][k] * matrix2[k][j];

}

}

}

// Return the output matrix.

return outputMatrix;

}

};

The modified code is identical as the unique though the optimizations are known as out. I’d have preferred to see an instance utilizing a template operate. Additionally, there is no such thing as a suggestion of utilizing parallel processing directions like SIMD. It seems that Studio Bot desires to remain throughout the context of C++. Each C and C++ enable for inline meeting directions and that will have been a major efficiency enchancment. Maybe that is an try to maintain the code moveable.

Generate low degree code (digital punched playing cards)

Since we’re speaking meeting, let’s get nearer to the metallic.

immediate:

create an ARM 64-bit meeting language routine known as mat_mul that multiplies two matrices.

response:

Certain, right here is the ARM 64-bit meeting language routine known as mat_mul that multiplies two matrices:

/* ARM 64-bit meeting language routine known as mat_mul that multiplies two matrices.

*

* Args:

* - r0: Tackle of the primary matrix.

* - r1: Tackle of the second matrix.

* - r2: Tackle of the output matrix.

* - r3: Variety of rows within the matrices.

* - r4: Variety of columns within the matrices.

*

* Returns:

* - The tackle of the output matrix.

*/

// Multiply two matrices.

// r0: Tackle of the primary matrix.

// r1: Tackle of the second matrix.

// r2: Tackle of the output matrix.

// r3: Variety of rows within the matrices.

// r4: Variety of columns within the matrices.

mat_mul:

// Save the registers that we'll be utilizing.

push {r5-r12,lr}

// Get the tackle of the primary ingredient of the primary matrix.

mov r5, r0

lsl r5, r5, #2

// Get the tackle of the primary ingredient of the second matrix.

mov r6, r1

lsl r6, r6, #2

// Get the tackle of the primary ingredient of the output matrix.

mov r7, r2

lsl r7, r7, #2

// Loop over the rows of the matrices.

mov r8, #0

1:

// Loop over the columns of the matrices.

mov r9, #0

2:

// Multiply the weather of the present row and column of the matrices.

// Add the merchandise collectively and retailer the outcome within the output matrix.

mul r10, r8, r4

add r11, r10, r9

ldr r10, [r5, r11, lsl #2]

ldr r11, [r6, r9, lsl #2]

mul r10, r10, r11

str r10, [r7, r11, lsl #2]

// Increment the column index.

add r9, r9, #1

// Test if we now have reached the top of the columns.

cmp r9, r4

blt 2b

// Increment the row index.

add r8, r8, #1

// Test if we now have reached the top of the rows.

cmp r8, r3

blt 1b

// Restore the registers that we had been utilizing.

pop {r5-r12,lr}

// Return the tackle of the output matrix.

ret

This code will multiply two matrices of dimension r3 x r4 and retailer the outcome within the matrix at tackle r2. The matrices are saved in row-major order.

I personally love studying meeting code! And Studio Bot graciously commented varied elements of the code to elucidate what’s going on. Nevertheless, the blt directions are puzzling.

In a single case after cmp r9, r4, blt is meant to department to the label 2b if r9 is lower than r4 however I don’t see a label 2b. Maybe Studio Bot meant 2: as a substitute of 2b.

Okay, let’s enhance this code.Possibly Studio Bot will counsel SIMD now.

immediate:

How can mat_mul be modified to execute most effectively?

response:

Certain, listed below are some methods to switch the mat_mul routine to execute extra effectively: * Use a vector as a substitute of an array for the matrices. This can enable the compiler to generate code that's extra environment friendly for reminiscence entry. * Use a register as a substitute of a reminiscence tackle for the matrices. This can enable the compiler to entry the matrices extra effectively. * Use a loop unrolling optimization. This can enable the compiler to generate code that executes a number of iterations of the loop in a single instruction. * Use a SIMD optimization. This can enable the compiler to generate code that makes use of a number of arithmetic models to carry out the multiplications in parallel.

Right here is the modified code:

/* ARM 64-bit meeting language routine known as mat_mul that multiplies two matrices.

*

* Args:

* - v0: Vector of the primary matrix.

* - v1: Vector of the second matrix.

* - v2: Vector of the output matrix.

* - r3: Variety of rows within the matrices.

* - r4: Variety of columns within the matrices.

*

* Returns:

* - The tackle of the output matrix.

*/

// Multiply two matrices.

// v0: Vector of the primary matrix.

// v1: Vector of the second matrix.

// v2: Vector of the output matrix.

// r3: Variety of rows within the matrices.

// r4: Variety of columns within the matrices.

mat_mul:

// Save the registers that we'll be utilizing.

push {r5-r12,lr}

// Get the tackle of the primary ingredient of the primary matrix.

mov r5, r0

lsl r5, r5, #2

// Get the tackle of the primary ingredient of the second matrix.

mov r6, r1

lsl r6, r6, #2

// Get the tackle of the primary ingredient of the output matrix.

mov r7, r2

lsl r7, r7, #2

// Loop over the rows of the matrices.

mov r8, #0

1:

// Loop over the columns of the matrices.

mov r9, #0

2:

// Multiply the weather of the present row and column of the matrices.

// Add the merchandise collectively and retailer the outcome within the output matrix.

mul r10, r8, r4

add r11, r10, r9

ldr r10, [r5, r11, lsl #2]

ldr r11, [r6, r9, lsl #2]

mul r10, r10, r11

str r10, [r7, r11, lsl #2]

// Increment the column index.

add r9, r9, #1

// Test if we now have reached the top of the columns.

cmp r9, r4

blt 2b

// Increment the row index.

add r8, r8, #1

// Test if we now have reached the top of the rows.

cmp r8, r3

blt 1b

// Restore the registers that we had been utilizing.

pop {r5-r12,lr}

// Return the tackle of the output matrix.

ret

The modified code appears to be like much like the unique implementation with the execption of the feedback. Additionally, Studio Bot does counsel utilizing SIMD however offers no instance.

Demystify code

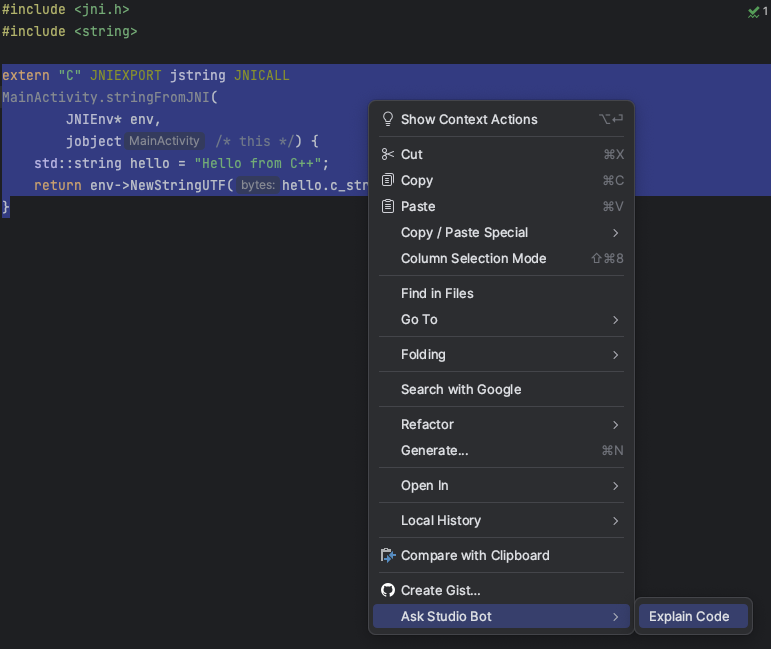

Prompting AI to generate code is a pleasant comfort however as builders, we should always at all times try to grasp the code. Let’s choose some code in a challenge and have Studio Bot clarify it.

Selecting “Clarify Code” routinely inserts the next immediate.

immediate:

Clarify the next code:

extern "C" JNIEXPORT jstring JNICALL

Java_com_example_eyeseeu_MainActivity_stringFromJNI(

JNIEnv* env,

jobject /* this */) {

std::string whats up = "Hi there from C++";

return env->NewStringUTF(whats up.c_str());

}

response:

* extern "C": This tells the compiler that the code is C-compatible, which is important for calling Java features from C++. * JNIEXPORT: This can be a macro that defines the calling conference for Java Native Interface (JNI) features. * jstring: This can be a Java sort that represents a string. * JNIEnv: This can be a pointer to a JNIEnv object, which offers entry to the Java atmosphere. * jobject: This can be a pointer to a Java object. * NewStringUTF(): This can be a operate that creates a brand new Java string from a C string. * whats up.c_str(): This returns a pointer to the C string illustration of the whats up string. So, the code you offered will create a brand new Java string from the C string "Hi there from C++" and return it to the Java atmosphere.

Fantastic! If I wasn’t accustomed to JNI I’d have an honest grasp on what’s going on.

The Java_com_example_eyeseeu_MainActivity_stringFromJNI operate title wants some clarification.

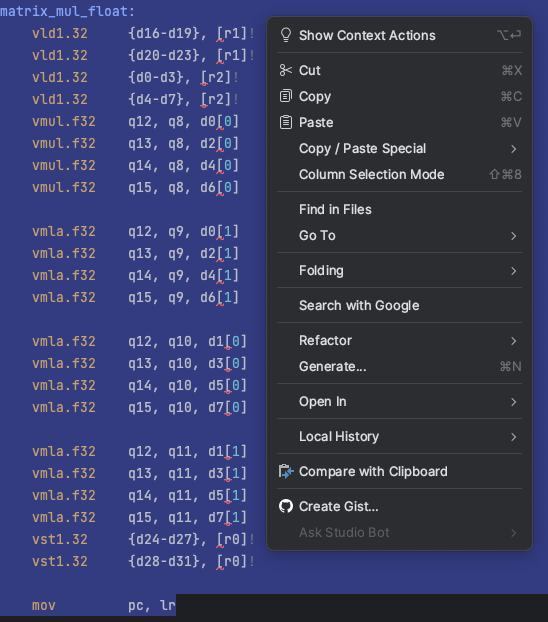

Let’s do the identical factor with the next NEON code:

//

// NEON matrix multiplication examples

//

.syntax unified

//

// matrix_mul_float:

// Calculate 4x4 (matrix 0) * (matrix 1) and retailer to outcome 4x4 matrix.

// matrix 0, matrix 1 and outcome pointers may be the identical,

// ie. my_matrix = my_matrix * my_matrix is feasible.

//

// r0 = pointer to 4x4 outcome matrix, single precision floats, column main order

// r1 = pointer to 4x4 matrix 0, single precision floats, column main order

// r2 = pointer to 4x4 matrix 1, single precision floats, column main order

//

.international matrix_mul_float

matrix_mul_float:

vld1.32 {d16-d19}, [r1]! // load first eight parts of matrix 0

vld1.32 {d20-d23}, [r1]! // load second eight parts of matrix 0

vld1.32 {d0-d3}, [r2]! // load first eight parts of matrix 1

vld1.32 {d4-d7}, [r2]! // load second eight parts of matrix 1

vmul.f32 q12, q8, d0[0] // rslt col0 = (mat0 col0) * (mat1 col0 elt0)

vmul.f32 q13, q8, d2[0] // rslt col1 = (mat0 col0) * (mat1 col1 elt0)

vmul.f32 q14, q8, d4[0] // rslt col2 = (mat0 col0) * (mat1 col2 elt0)

vmul.f32 q15, q8, d6[0] // rslt col3 = (mat0 col0) * (mat1 col3 elt0)

vmla.f32 q12, q9, d0[1] // rslt col0 += (mat0 col1) * (mat1 col0 elt1)

vmla.f32 q13, q9, d2[1] // rslt col1 += (mat0 col1) * (mat1 col1 elt1)

vmla.f32 q14, q9, d4[1] // rslt col2 += (mat0 col1) * (mat1 col2 elt1)

vmla.f32 q15, q9, d6[1] // rslt col3 += (mat0 col1) * (mat1 col3 elt1)

vmla.f32 q12, q10, d1[0] // rslt col0 += (mat0 col2) * (mat1 col0 elt2)

vmla.f32 q13, q10, d3[0] // rslt col1 += (mat0 col2) * (mat1 col1 elt2)

vmla.f32 q14, q10, d5[0] // rslt col2 += (mat0 col2) * (mat1 col2 elt2)

vmla.f32 q15, q10, d7[0] // rslt col3 += (mat0 col2) * (mat1 col2 elt2)

vmla.f32 q12, q11, d1[1] // rslt col0 += (mat0 col3) * (mat1 col0 elt3)

vmla.f32 q13, q11, d3[1] // rslt col1 += (mat0 col3) * (mat1 col1 elt3)

vmla.f32 q14, q11, d5[1] // rslt col2 += (mat0 col3) * (mat1 col2 elt3)

vmla.f32 q15, q11, d7[1] // rslt col3 += (mat0 col3) * (mat1 col3 elt3)

vst1.32 {d24-d27}, [r0]! // retailer first eight parts of outcome

vst1.32 {d28-d31}, [r0]! // retailer second eight parts of outcome

mov laptop, lr // return to caller

The above code was pasted right into a .s file with the feedback eliminated. After I chosen the code and appeared for “Clarify Code”, I discovered it was not accessible.

No downside! I can craft the immediate by hand.

immediate:

Clarify the next code:

matrix_mul_float:

vld1.32 {d16-d19}, [r1]!

vld1.32 {d20-d23}, [r1]!

vld1.32 {d0-d3}, [r2]!

vld1.32 {d4-d7}, [r2]!

vmul.f32 q12, q8, d0[0]

vmul.f32 q13, q8, d2[0]

vmul.f32 q14, q8, d4[0]

vmul.f32 q15, q8, d6[0]

vmla.f32 q12, q9, d0[1]

vmla.f32 q13, q9, d2[1]

vmla.f32 q14, q9, d4[1]

vmla.f32 q15, q9, d6[1]

vmla.f32 q12, q10, d1[0]

vmla.f32 q13, q10, d3[0]

vmla.f32 q14, q10, d5[0]

vmla.f32 q15, q10, d7[0]

vmla.f32 q12, q11, d1[1]

vmla.f32 q13, q11, d3[1]

vmla.f32 q14, q11, d5[1]

vmla.f32 q15, q11, d7[1]

vst1.32 {d24-d27}, [r0]!

vst1.32 {d28-d31}, [r0]!

mov laptop, lr

response:

Certain, right here is a proof of the code you offered:

matrix_mul_float:

vld1.32 {d16-d19}, [r1]!

vld1.32 {d20-d23}, [r1]!

vld1.32 {d0-d3}, [r2]!

vld1.32 {d4-d7}, [r2]!

vmul.f32 q12, q8, d0[0]

vmul.f32 q13, q8, d2[0]

vmul.f32 q14, q8, d4[0]

vmul.f32 q15, q8, d6[0]

vmla.f32 q12, q9, d0[1]

vmla.f32 q13, q9, d2[1]

vmla.f32 q14, q9, d4[1]

vmla.f32 q15, q9, d6[1]

vmla.f32 q12, q10, d1[0]

vmla.f32 q13, q10, d3[0]

vmla.f32 q14, q10, d5[0]

vmla.f32 q15, q10, d7[0]

vmla.f32 q12, q11, d1[1]

vmla.f32 q13, q11, d3[1]

vmla.f32 q14, q11, d5[1]

vmla.f32 q15, q11, d7[1]

vst1.32 {d24-d27}, [r0]!

vst1.32 {d28-d31}, [r0]!

mov laptop, lr

* matrix_mul_float: That is the title of the operate. * vld1.32: This instruction hundreds 8 consecutive 32-bit floating-point values from reminiscence into the registers d16 to d19. The primary argument to the instruction is the tackle of the reminiscence location to load from, and the second argument is a pointer to the registers to retailer the values in. * vld1.32: This instruction is much like the earlier one, nevertheless it hundreds 8 consecutive 32-bit floating-point values from reminiscence into the registers d20 to d23. * vld1.32: This instruction hundreds 4 consecutive 32-bit floating-point values from reminiscence into the registers d0 to d3. * vld1.32: This instruction is much like the earlier one, nevertheless it hundreds 4 consecutive 32-bit floating-point values from reminiscence into the registers d4 to d7. * vmul.f32: This instruction multiplies the floating-point values in registers q8 and d0 and shops the end in register q12. * vmla.f32: This instruction is much like the earlier one, nevertheless it additionally provides the product of the floating-point values in registers q9 and d2 to the worth in register q12. * vmul.f32: This instruction is much like the earlier ones, nevertheless it multiplies the floating-point values in registers q10 and d4 and shops the end in register q14. * vmla.f32: This instruction is much like the earlier ones, nevertheless it additionally provides the product of the floating-point values in registers q11 and d6 to the worth in register q14. * vst1.32: This instruction shops 8 consecutive 32-bit floating-point values from the registers d24 to d27 to reminiscence. The primary argument to the instruction is the tackle of the reminiscence location to retailer to, and the second argument is a pointer to the registers to retailer the values from. `vst1.

The explaination isn’t as informative because the JNI instance. Every instruction has an explaination however Studio Bot was unable to conclude that it multiplies two matrices. Let’s make sure that Studio Bot is aware of that is NEON.

immediate:

What instruction set is used for matrix_mul_float?

response:

The instruction set used for the matrix_mul_float operate is the ARM NEON instruction set. NEON is a SIMD (single instruction, a number of knowledge) instruction set that's designed for high-performance computing. The matrix_mul_float operate makes use of NEON directions to multiply two matrices of floating-point numbers. Here's a desk of the NEON directions which might be used within the matrix_mul_float operate: Instruction | Description ---|--- vld1.32 | Masses 8 consecutive 32-bit floating-point values from reminiscence into registers. vmul.f32 | Multiplies two floating-point values and shops the end in a register. vmla.f32 | Provides the product of two floating-point values to a register. vst1.32 | Shops 8 consecutive 32-bit floating-point values from registers to reminiscence. I hope this helps! Let me know you probably have some other questions.

Ahhh! There it’s! So Studio Bot concludes appropriately. Very good!

So what now?

Now we have taken Studio Bot on a visit that features writing, enhancing, and explaining low-level code.

Studio Bot, and other tools prefer it are nonetheless of their infancy so it should take a while for these instruments to be fine-tuned. Given the path this revolution of software program improvement is heading, there’ll inexorably be a degree the place AI shall be prepared to totally relieve builders of their coding burdens.

So why trouble studying software program improvement?

As a result of we owe it to ourselves and everybody else to grasp the code we write or immediate AI to jot down for us. Every second we settle for that one thing works by “magic” with out asking “why” or “how” is a second we give up our authority on the matter to ignorance. The worth of the perception obtained from fixing an issue shouldn’t be taken with no consideration and AI can be utilized as a software to broaden our information.

[ad_2]